Leaked Data Reveals Massive Israeli Campaign to Remove Pro-Palestine Posts on Facebook and Instagram

A sweeping crackdown on posts on Instagram and Facebook that are critical of Israel—or even vaguely supportive of Palestinians—was directly orchestrated by the government of Israel, according to internal Meta data obtained by Drop Site News. The data show that Meta has complied with 94% of takedown requests issued by Israel since October 7, 2023. Israel is the biggest originator of takedown requests globally by far, and Meta has followed suit—widening the net of posts it automatically removes, and creating what can be called the largest mass censorship operation in modern history.

Government requests for takedowns generally focus on posts made by citizens inside that government’s borders, Meta insiders said. What makes Israel’s campaign unique is its success in censoring speech in many countries outside of Israel. What’s more, Israel’s censorship project will echo well into the future, insiders said, because the AI program Meta is currently training to moderate content will base future decisions on the successful takedown of content critical of Israel’s genocide.

The data, compiled and provided to Drop Site News by whistleblowers, reveal the internal mechanics of Meta’s “Integrity Organization,” an organization within Meta dedicated to ensuring safety and authenticity on its platforms. Takedown requests (TDRs) allow individuals, organizations, and government officials to request the removal of content that allegedly violates Meta’s policies. The documents indicate that the vast majority of Israel’s requests (95%) fall under Meta’s “terrorism” or “violence and incitement” categories. And Israel’s requests have overwhelmingly targeted users from Arab and Muslim-majority nations in a massive effort to silence criticism of Israel.

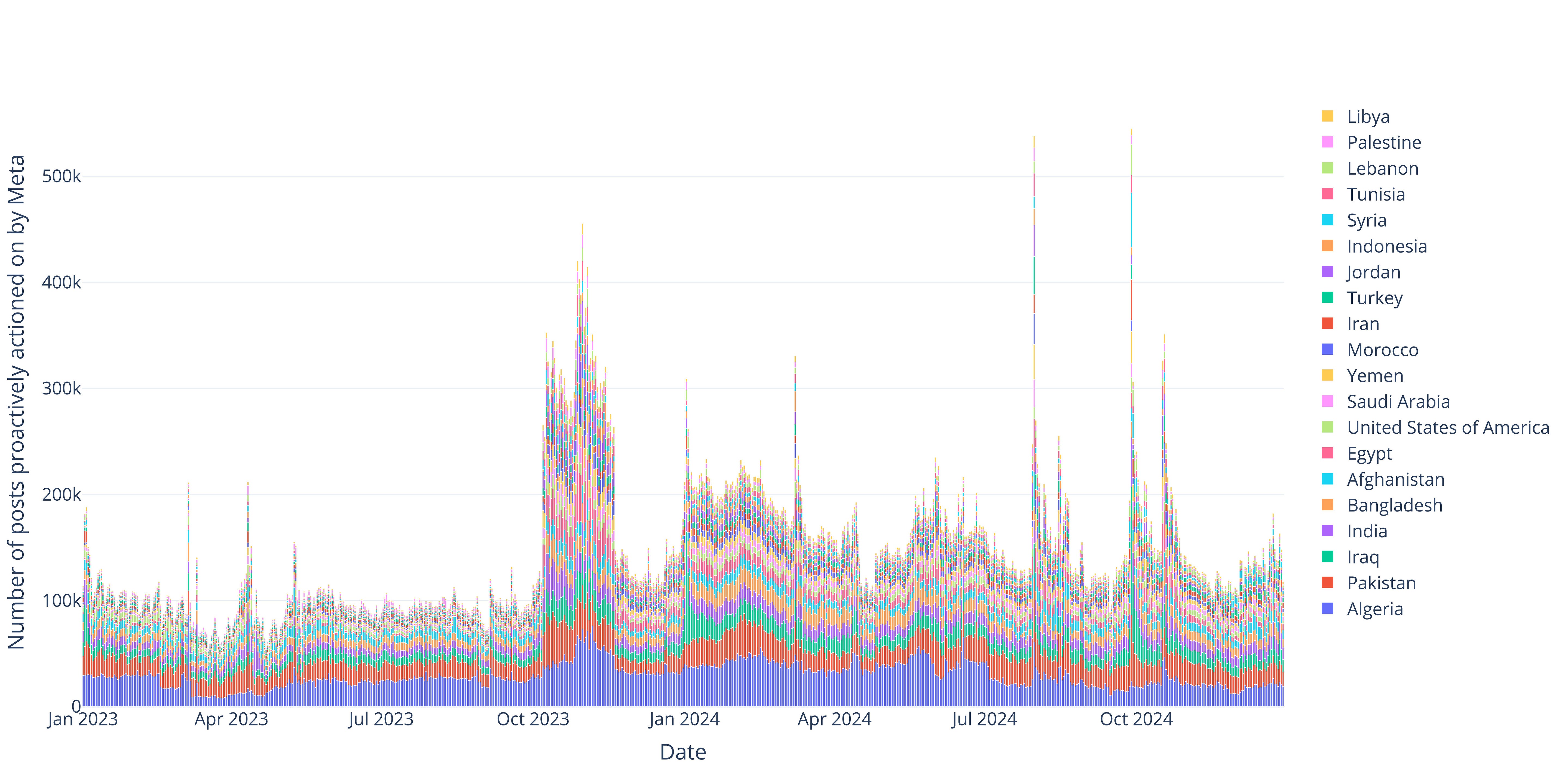

Multiple independent sources inside Meta confirmed the authenticity of the information provided by the whistleblowers. The data also show that Meta removed over 90,000 posts to comply with TDRs submitted by the Israeli government in an average of 30 seconds. Meta also significantly expanded automated takedowns since October 7, resulting in an estimated 38.8 million additional posts being “actioned upon” across Facebook and Instagram since late 2023. “Actioned upon” in Facebook terms means that a post was either removed, banned, or suppressed.

Takedown Requests

All of the Israeli government’s TDRs post-October 7th contain the exact same complaint text, according to the leaked information, regardless of the substance of the underlying content being challenged. Sources said that not a single Israeli TDR describes the exact nature of the content being reported, even though the requests link to an average of 15 different pieces of content. Instead, the reports simply state, in addition to a description of the October 7th attacks, that:

This is an urgent request regarding videos posted on Facebook which contain inciting content. The file attached to this request contains link [sic] to content which violated articles 24(a) and 24(b) of the Israeli Counter-Terrorism Act (2016), which prohibits incitement to terrorism praise for acts of terrorism and identification or support of terror organizations. Moreover, several of the links violate article 2(4) of the Privacy Protection Act (1982), which prohibits publishing images in circumstances that could humiliate the person depicted, as they contain images of the killed, injured, and kidnapped. Additionally, to our understanding, the content in the attached report violates Facebook’s community standards.

Meta's content enforcement system processes user-submitted reports through different pathways, depending on who submits them. Regular users can report posts via the platform’s built-in reporting function, triggering a review. Reported posts are typically first labeled as violating or non-violating by machine-learning models, though sometimes human moderators review them as well. If the AI assigns a high confidence score indicating a violation, the post is removed automatically. If the confidence score is low, human moderators review the post before deciding whether to take action.
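The routing rule described above can be illustrated with a minimal sketch. It is not Meta's code: the names `Report`, `Outcome`, and `route`, and the 0.9 cutoff, are hypothetical and chosen only for illustration, since the leaked data described here do not give an actual threshold.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    AUTO_REMOVE = "auto_remove"    # post removed without human review
    HUMAN_REVIEW = "human_review"  # post queued for a human moderator


# Hypothetical cutoff; the article does not state a real threshold value.
HIGH_CONFIDENCE = 0.9


@dataclass
class Report:
    post_id: str
    # Model's confidence that the post violates policy, from 0.0 to 1.0.
    violation_score: float


def route(report: Report, threshold: float = HIGH_CONFIDENCE) -> Outcome:
    """Route a user report by the classifier's confidence score."""
    if not 0.0 <= report.violation_score <= 1.0:
        raise ValueError("violation_score must be between 0.0 and 1.0")
    if report.violation_score >= threshold:
        return Outcome.AUTO_REMOVE
    return Outcome.HUMAN_REVIEW
```

Under this sketch, lowering the threshold widens the set of posts removed automatically, which is one way an expansion of automated takedowns could show up in practice.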

Governments and organizations, on the other hand, have privileged channels to trigger content review. Reports submitted through these channels receive higher priority and are almost always reviewed by human moderators rather than AI. Once reviewed by humans, the reviews are fed back into Meta’s AI system to help it better assess similar content in the future. While everyday users can also file TDRs, they are rarely acted upon. Government-submitted TDRs are far more likely to result in content removal.

Meta has overwhelmingly complied with Israel’s requests, making an exception for the government account by taking down posts without human reviews, according to the whistleblowers, while still feeding that data back into Meta’s AI. A Human Rights Watch (HRW) report investigating Meta’s moderation of pro-Palestine content post-October 7th found that, of 1,050 posts HRW documented as taken down or suppressed on Facebook or Instagram, 1,049 involved peaceful content in support of Palestine, while just one post was content in support of Israel.

A source within Meta’s Integrity Organization confirmed that internal reviews of their automated moderation found that pro-Palestinian content that did not violate Meta’s policies was frequently removed. In other cases, pro-Palestinian content that should have been simply removed was given a “strike,” which indicates a more serious offense. Should a single account receive too many strikes on content that it publishes, the entire account can be removed from Meta platforms.

When concerns about overenforcement against pro-Palestinian content were raised inside the Integrity Organization, the source said, leadership responded by saying that they preferred to overenforce against potentially violating content, rather than underenforce and risk leaving violating content live on Meta platforms.

Remove, Strike, Suspend

Within Meta, several key leadership positions are filled by figures with personal connections to the Israeli government. The Integrity Organization is run by Guy Rosen, a former Israeli military official who served in the Israeli military’s signals intelligence unit, Unit 8200. Rosen was the founder of Onavo, a web analytics and VPN firm that then-Facebook acquired in October 2013. (Previous reporting has revealed that, prior to acquiring the company, Facebook used data Onavo collected from their VPN users to monitor the performance of competitors—part of the anti-competitive behavior alleged by the Federal Trade Commission under the Biden administration in its suit against Meta.)

Rosen’s Integrity Organization works synergistically with Meta’s Policy Organization, according to employees. The Policy Organization sets the rules, and the Integrity Organization enforces them—but the two feed one another, they said. “Policy changes are often driven by data from the integrity org,” explained one Meta employee. As of this year, Joel Kaplan replaced Nick Clegg as the head of the Policy Organization. Kaplan is a former Bush administration official who has worked with Israeli officials in the past on fighting “online incitement.”

Meta’s Director of Public Policy for Israel and the Jewish Diaspora, Jordana Cutler, has also intervened to investigate pro-Palestine content. Cutler is a former senior Israeli government official and advisor to Prime Minister Benjamin Netanyahu. Cutler has reportedly used her role to flag pro-Palestine content. According to internal communications reviewed by Drop Site, as recently as March, Cutler actively instructed employees of the company to search for and review content mentioning Ghassan Kanafani, an Arab novelist considered to be a pioneer of Palestinian literature. Immediately prior to joining Meta as a senior policymaker, she spent nearly three years as Chief of Staff at the Israeli Embassy in Washington, D.C., and nearly five years serving as deputy to one of Netanyahu’s senior advisors, before becoming Netanyahu’s advisor on Diaspora Affairs.

According to internal information reviewed by Drop Site, Cutler has continued to demand the review of content related to Kanafani under Meta’s policy “Glorification, Support or Representation” of individuals or organizations “that proclaim a violent mission or are engaged in violence to have a presence on our platforms.” Kanafani, who was killed in a 1972 car bombing orchestrated by the Mossad, served as a spokesperson for the left-wing Palestinian nationalist group, the Popular Front for the Liberation of Palestine (PFLP). The PFLP was designated as a terrorist group over a quarter century after he was killed, which, according to Meta’s guidelines and Cutler’s efforts, serves as a basis to flag his content for removal, strikes, and possible suspension.

Global Scope

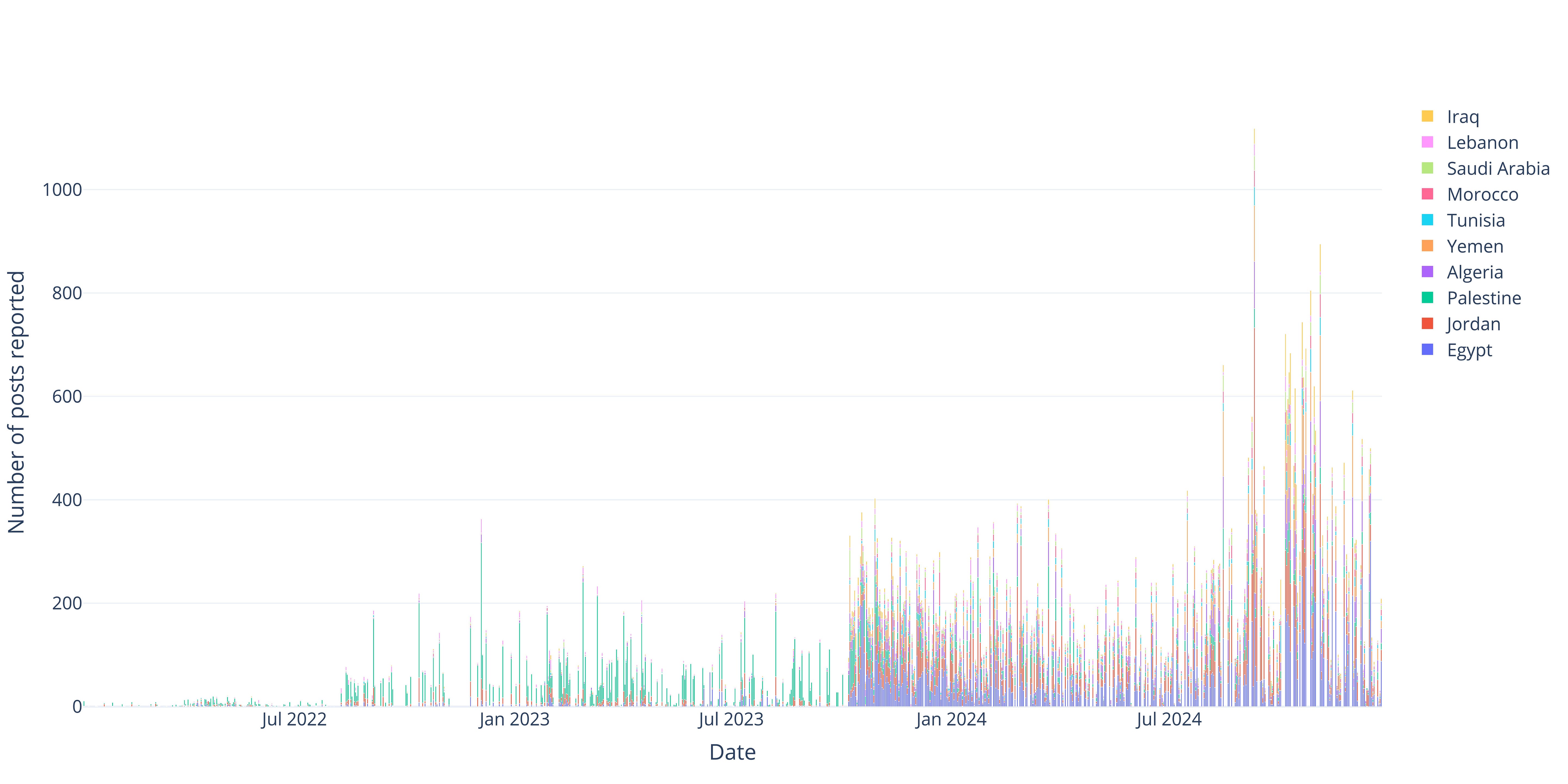

The leaked documents reveal that Israel’s takedown requests have overwhelmingly targeted users from Arab and Muslim-majority nations, with the top 12 countries affected being: Egypt (21.1%), Jordan (16.6%), Palestine (15.6%), Algeria (8.2%), Yemen (7.5%), Tunisia (3.3%), Morocco (2.9%), Saudi Arabia (2.7%), Lebanon (2.6%), Iraq (2.6%), Syria (2%), Turkey (1.5%). In total, users from over 60 countries have reported censorship of content related to Palestine, according to Human Rights Watch—with posts being removed, accounts suspended, and visibility reduced through shadow banning.

Notably, only 1.3% of Israel’s takedown requests target Israeli users, making Israel an outlier among governments that typically focus their censorship efforts on their own citizens. For example, 63% of Malaysia’s takedown requests target Malaysian content, and 95% of Brazil’s requests target Brazilian content. Israel, however, has turned its censorship efforts outward, focusing on silencing critics and narratives that challenge its policies, particularly in the context of the ongoing conflict in Gaza and the West Bank.

Despite Meta’s awareness of Israel’s aggressive censorship tactics for at least seven years, according to Meta whistleblowers, the company has failed to curb the abuse. Instead, one said, the company “actively provided the Israeli government with a legal entry-point for carrying out its mass censorship campaign.”

It ain't working. This is an act of desperation. Those who seek to imprison ideas will always fail, because the ideas they seek to censor will always escape, and become more powerful in the process. The universe of thought functions according to different laws than the world of matter, and materialist tactics don't kill ideas. An idea is not a piece of real estate that you can build a fence around, much less a corporeal body that can be tossed into a prison cell.

"You can't have a better press agent than a censor." -John Waters

Muchas Gracias to the entire Drop Site team. Alf Shukr!

Scandal. We know corporations cannot be trusted to protect Americans' free speech. Currently there are no laws to force social media companies to respect the free speech of their users. Are there any laws to prevent foreign actors from influencing said corporations to suppress speech?

Or laws to punish companies that work with foreign actors to do so? Or to punish companies that work with domestic governments to violate the Constitution? Thank you DropSite.